Research Methods in Psychology: Empirical Basis and Experimental Designs

Lecture notes on research methods in psychology, covering the empirical basis of research, experimental and quasi-experimental designs, and observational methods. Intended for university students of psychology.

RESEARCH METHODS

UNIT 1. EMPIRICAL BASIS OF RESEARCH. KNOWLEDGE GENERATION

- Psychology and science.

- Scientific method.

- Theories, laws and hypotheses.

- Hypothetico-deductive method.

- Scientific research.

- Quantitative vs. qualitative approaches.

- Levels of evidence.

UNIT 3. MEASUREMENT IN PSYCHOLOGY. Quantitative research requirements

- Variables.

- Psychological constructs.

- Scales of measurement.

- Reliability.

- Validity.

- Samples.

- Effect size.

UNIT 4. EXPERIMENTAL METHODS

- Demonstrating cause and effect

- Independent samples designs

- Within-subjects designs

- Factorial designs

- Single-subject experiments

- Validity in experiments

UNIT 5. PRE-EXPERIMENTAL AND QUASI-EXPERIMENTAL METHODS

- Pre-experiments

- Quasi-experiments

- The nonequivalent control group design

- Time-series designs

UNIT 6. SELECTIVE, CORRELATIONAL METHODS. Ex post facto designs

- Ex post facto studies

- Retrospective studies

- Prospective studies

- Developmental designs

- Validity in ex post facto designs

UNIT 7. QUALITATIVE APPROACHES IN PSYCHOLOGY

- Overview

- Phases

- Methods

- Validity and reliability

UNIT 8. OBSERVATIONAL METHODS

- Overview

- Sampling behaviour

- Observational methods

- Recording behaviour

- Analysis of observational data

- Thinking critically about observational research

UNIT 9. HOW TO DISSEMINATE THE RESULTS OF AN INVESTIGATION

- Research reports

- General guidelines

- APA-style report

- Presenting a paper

- Presenting a poster

EMPIRICAL BASIS OF RESEARCH AND KNOWLEDGE GENERATION

PSYCHOLOGY AND SCIENCE

Science is a system of thought. It is a rational explanation of how things work in the world, and a process of getting closer to truths and further from myths, fables and unquestioned or 'intuitive' ideas about people. It is also a body of knowledge, particularly the knowledge that has resulted from the systematic application of the scientific method.

Characteristics of science:

- Falsifiability: there must be some empirical test that would allow researchers to show that a particular idea is false.

- Objectivity: a reliance on evidence that at least two observers can independently verify.

- Replicability: a set of findings can be reproduced when the study is repeated.

- Self-correction: science corrects errors or faulty conclusions that emerged from previous research.

- Systematic: science tends to approach problems in a methodical way.

Pseudoscience: claims presented so that they appear scientific even though they lack supporting evidence and plausibility. Characteristics:

- Scientific appearance: "psychobabble"

- Tolerating inconsistencies

- Absence of peer review

- Anecdotal evidence

- Absence of rigorous testing

- Supernatural explanations

- Appeals to authority

- Grandiose claims

- Stagnation

Critical thinking: a more careful style of forming and evaluating knowledge than simply relying on intuition. Together with the scientific method, critical thinking helps us develop more effective and accurate ways to work out what makes people do, think and feel the things they do.

Scientific attitude:

- Curiosity: always asking new questions.

  - Hypothesis: curiosity, if not guided by caution, can lead to the death of felines and perhaps humans.

- Skepticism: like curiosity, it generates questions. It means not accepting a 'fact' as true without challenging it, and checking whether 'facts' can withstand attempts to disprove them.

- Humility: seeking the truth rather than trying to be right. A scientist needs to be able to accept being wrong.

- Critical thinking: analyzing information to decide whether it makes sense, rather than simply accepting it.

  - Goal: getting at the truth, even if it means putting aside your own ideas.

  - How:

    - Look for hidden assumptions (and decide whether you agree with them), bias, politics, values or personal connections.

    - Put aside your own assumptions and biases, and look at the evidence.

    - Consider whether there are other possible explanations for the facts or results.

    - Check whether there was a flaw in how the information was collected.

SCIENTIFIC METHOD

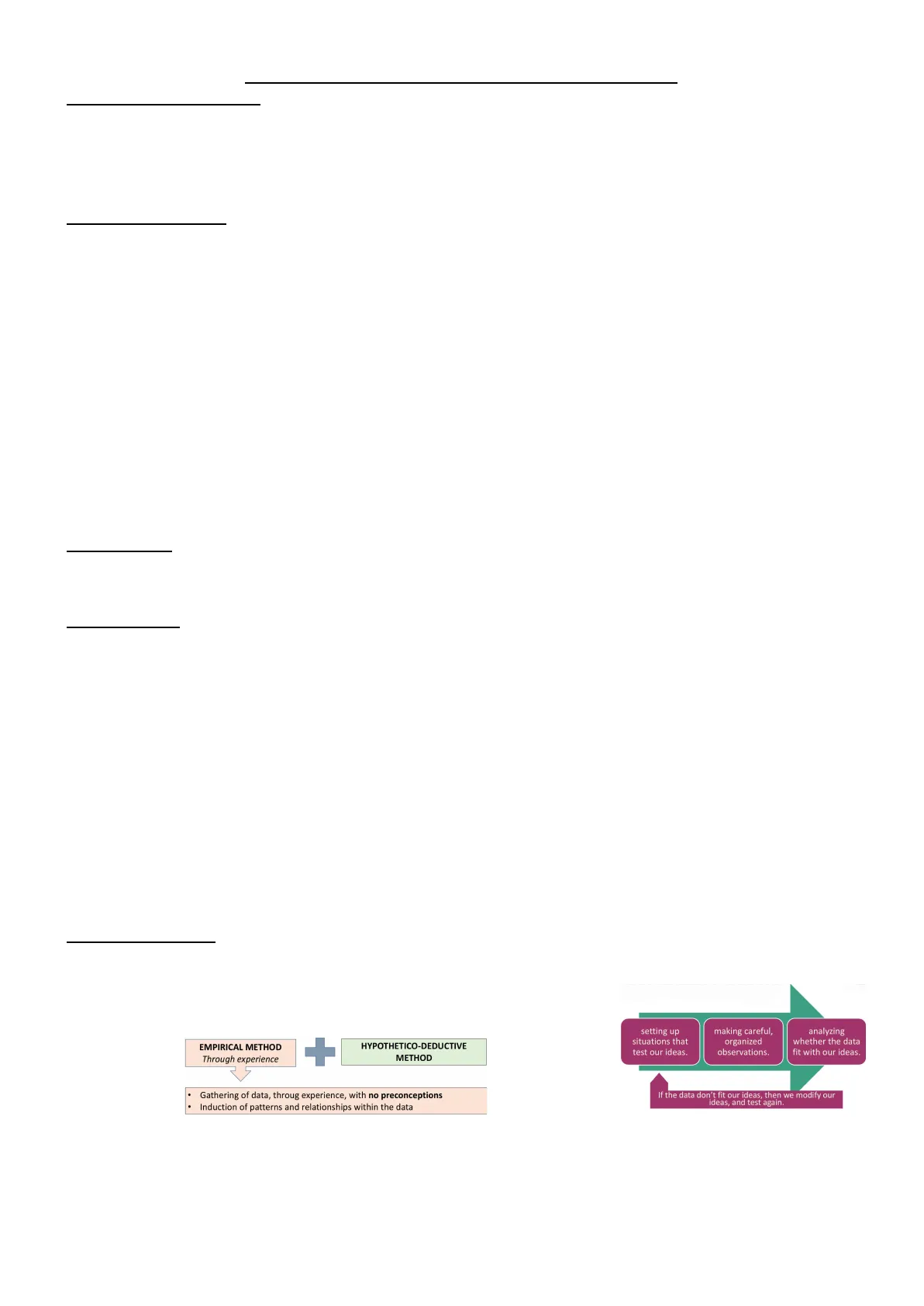

Science is a way of thinking that leads us towards testable explanations of what we observe in the world around us. Scientific research does not prove theories true, it exposes different explanations. The scientific method is the process of testing our ideas about the world by: EMPIRICAL METHOD Through experience + HYPOTHETICO-DEDUCTIVE METHOD · Gathering of data, throug experience, with no preconceptions Induction of patterns and relationships within the data setting up situations that test our ideas. making careful, organized observations. analyzing whether the data fit with our ideas. If the data don't fit our ideas, then we modify our ideas, and test again.

THEORIES, LAWS AND HYPOTHESES

Laws: general principles that apply to all situations. There are few universally accepted laws within the behavioral sciences.

Theory: in science, a set of principles, built on observations and other verifiable facts, that explains some phenomenon and predicts its future behavior.

Hypothesis: a testable prediction consistent with our theory. "Testable" means that the hypothesis is stated in such a way that we could make observations to find out whether it is true.

HYPOTHETICO-DEDUCTIVE METHOD

- Observation: carry out tests of relatively short-term memory using word lists of varying lengths, gathering and ordering data.

- Induction of generalizations or 'laws': when people are given a list of 20 words and asked to 'free recall' them (in any order) as soon as the list has been presented, they tend to recall the last four or five words better than the rest. This is known as the recency effect.

- Development of explanatory theories: the suggestion that we have a short-term memory store (STS) of about 30 seconds' duration and a long-term store (LTS), and that list items have to be rehearsed in the STS 'buffer' if they are to be transferred to the LTS.

- Deduction of hypothesis to test theory: if it is true that items are rehearsed in the buffer, then people might empty that buffer first when asked to recall a list, thereby producing the recency effect.

- Hypothesis: the recency effect is caused by early emptying of the rehearsal buffer.

- Test hypothesis (develop research prediction): have several research participants attempt to free recall a word list. Prevent them from starting recall for 30 seconds after presentation of the list by having them perform an unrelated distraction task.

- Results of test provide support for or challenge to theory: if the recency effect disappears, the rehearsal-buffer-emptying hypothesis is supported, and so, in turn, is the general STS/LTS theory. If not, some other explanation of the recency effect is required.
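The logic of this test can be illustrated with a deliberately simplified simulation. This is a minimal sketch and not a model taken from the notes. It assumes a hypothetical buffer that holds the last four list items, and a hypothetical fixed probability (`P_LTS = 0.25`) that any item reached the long-term store. Under those assumptions, immediate recall shows a recency advantage. A distraction task that empties the buffer before recall removes that advantage, which is the pattern the hypothesis predicts.

```python
from __future__ import annotations

# Toy two-store (STS/LTS) model of immediate vs. delayed free recall.
# Both parameters are hypothetical values chosen for illustration only.
BUFFER_SIZE = 4   # assumed capacity of the rehearsal buffer (last items of the list)
P_LTS = 0.25      # assumed chance that any item was transferred to the LTS


def recall_curve(list_length: int, distractor_delay: bool) -> list[float]:
    """Expected probability of recalling each serial position, 1..list_length."""
    curve = []
    for position in range(1, list_length + 1):
        in_buffer = position > list_length - BUFFER_SIZE
        # A distraction task before recall empties the buffer, so nothing
        # can be retrieved from the STS after the delay.
        p_buffer = 1.0 if (in_buffer and not distractor_delay) else 0.0
        # An item is recalled if it is retrieved from either store.
        curve.append(1 - (1 - p_buffer) * (1 - P_LTS))
    return curve


def recency_advantage(curve: list[float]) -> float:
    """Mean recall of the last BUFFER_SIZE positions minus mean recall of the rest."""
    last, rest = curve[-BUFFER_SIZE:], curve[:-BUFFER_SIZE]
    return sum(last) / len(last) - sum(rest) / len(rest)


if __name__ == "__main__":
    immediate = recall_curve(20, distractor_delay=False)
    delayed = recall_curve(20, distractor_delay=True)
    print("recency advantage, immediate recall:", recency_advantage(immediate))
    print("recency advantage, after 30 s distraction:", recency_advantage(delayed))
```

The toy model ignores primacy and any differences in how often items are rehearsed. It only shows why emptying the buffer should remove the recency effect if the STS/LTS theory is correct.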

SCIENTIFIC RESEARCH

Variables: the things that alter, and whose changes we can measure.

Samples: the people we are going to study or work with (PARTICIPANTS). They are taken from the POPULATION.

Design: the overall structure and strategy of the research study. It depends on:

- Resources

- Nature of the research aim

- Previous research

- Researcher's attitude

Analysis: design and measurement will have a direct effect on the analysis.
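Drawing participants from a population can be sketched as simple random sampling, in which every member of the sampling frame has the same chance of being selected. The sketch below is illustrative only. The 500-student sampling frame and the function name `simple_random_sample` are hypothetical. A seed is used so that the same sample can be redrawn.

```python
import random
from typing import List, Optional


def simple_random_sample(population: List[str], n: int, seed: Optional[int] = None) -> List[str]:
    """Draw n participants without replacement; each member has an equal chance."""
    if not 0 < n <= len(population):
        raise ValueError("sample size must be between 1 and the population size")
    rng = random.Random(seed)  # a seeded generator makes the draw reproducible
    return rng.sample(population, n)


# Hypothetical sampling frame: 500 students identified only by a code.
population = [f"student_{i:03d}" for i in range(500)]
participants = simple_random_sample(population, n=30, seed=42)
print(participants[:5])
```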

QUANTITATIVE vs. QUALITATIVE APPROACHES

Quantitative: numerical data. Qualitative: non-numerical data.

LEVELS OF EVIDENCE

Levels of evidence are based on the methodological quality of the study, its validity and its applicability.

- Level I: evidence from a systematic review or meta-analysis of all relevant randomized controlled trials (RCTs), or evidence-based clinical practice guidelines.

- Level II: evidence obtained from at least one well-designed RCT.

- Level III: evidence obtained from well-designed controlled trials without randomization.

- Level IV: evidence from well-designed case-control or cohort studies.

- Level V: evidence from systematic reviews of descriptive and qualitative studies.

- Level VI: evidence from a single descriptive or qualitative study.

- Level VII: evidence from the opinion of authorities and/or reports of expert committees.

[Figure: Evidence pyramid of types of resources, ordered by quality of evidence. Filtered information (the TRIP Database searches these simultaneously): systematic reviews; critically-appraised topics (evidence syntheses); critically-appraised individual articles (article synopses). Unfiltered information: randomized controlled trials (RCTs); cohort studies; case-controlled studies; case series/reports; background information/expert opinion.]

KEY IDEAS IN RESEARCH

- Psychological researchers generally follow a scientific approach, developed from the 'empirical method' into the 'hypothetico-deductive method'.

- Most people use the rudimentary logic of scientific theory testing quite often in their everyday lives.

- Although scientific thinking is a careful extension of common-sense thinking, common sense on its own can lead to false assumptions.

- Claims about the world must always be supported by evidence.

- Good research is replicable; theories are clearly explained and falsifiable.

- Theories in science and in psychology are not 'proven' true but are supported or challenged by research evidence.

- Scientific research is a continuous and social activity, involving promotion and checking of ideas among colleagues.

- Research has to be planned carefully, with attention to design, variables, samples and subsequent data analysis. If all these areas are not thoroughly planned, results may be ambiguous or useless.

- Some researchers have strong objections to the use of traditional quantitative scientific methods in the study of persons. They support qualitative methods and data gathering, dealing with meaningful verbal data rather than exact measurement and statistical summary.

MEASUREMENT IN PSYCHOLOGY. Quantitative research requirements

VARIABLES

Variable: anything that varies. Variables are observable or hypothetical events that can change and whose changes can be measured in some way.

- Dependent variable (DV): the variable in the study whose changes depend on the manipulation of the independent variable. We do not know the values of the DV until after we have manipulated the IV.

- Independent variable (IV): the variable manipulated by the experimenter, defined in terms of LEVELS. We know the values of the IV before we start the experiment.

[Figure: Manipulation of the independent variable (temperature change) produces a change in the dependent variable (number of aggressive story endings).]
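The IV's levels are fixed before the experiment, and the DV is known only afterwards. An experiment can therefore be set up by assigning participants to IV levels first and leaving the DV slots empty until measurement. This is a minimal sketch that assumes random assignment with equal group sizes, a common choice that the notes do not state. The level labels, participant codes and `assign_to_levels` are hypothetical, loosely following the temperature example in the figure.

```python
import random
from collections import Counter
from typing import Dict, List, Optional, Sequence


def assign_to_levels(participants: Sequence[str], levels: List[str],
                     seed: Optional[int] = None) -> Dict[str, str]:
    """Randomly assign each participant to one IV level, keeping groups balanced."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    # Dealing the shuffled list round-robin makes group sizes differ by at most one.
    return {person: levels[i % len(levels)] for i, person in enumerate(shuffled)}


# Hypothetical IV with two levels, fixed before the experiment starts.
levels = ["comfortable room", "hot room"]
participants = [f"P{i}" for i in range(1, 21)]
assignment = assign_to_levels(participants, levels, seed=1)

# DV (hypothetical: number of aggressive story endings) is unknown until
# after the manipulation, so every slot starts empty and is filled in later.
dv_aggressive_endings: Dict[str, Optional[int]] = {p: None for p in participants}

print(Counter(assignment.values()))
```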

PSYCHOLOGICAL CONSTRUCTS

Construct: a concept that is measurable but not directly observable. Constructs must be carefully explained, and their measurement must be precise and clear.

[Figure: Operationalization of the construct 'depression' through a depression inventory, teachers' observations and a clinical review.]

An operational definition of a construct gives us the set of activities required to measure it.
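An operational definition turns a construct into a concrete measurement procedure. As a hedged illustration, the sketch below operationalizes a construct as the total score on an imaginary five-item inventory, with each item answered on a 0 to 3 scale. The item count, response range and `inventory_score` are hypothetical and do not describe any real instrument.

```python
from typing import Sequence

# Hypothetical operational definition: total score on an imaginary
# five-item inventory, each item answered on a 0 to 3 scale.
N_ITEMS = 5
MIN_RESPONSE, MAX_RESPONSE = 0, 3


def inventory_score(responses: Sequence[int]) -> int:
    """Apply the measurement procedure: check every answer, then sum them."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    for r in responses:
        if not MIN_RESPONSE <= r <= MAX_RESPONSE:
            raise ValueError(f"response {r} is outside {MIN_RESPONSE}..{MAX_RESPONSE}")
    return sum(responses)


print(inventory_score([0, 1, 2, 3, 1]))  # → 7
```

Writing the procedure down this explicitly is the point of an operational definition: anyone applying the same steps to the same answers gets the same score.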

SCALES OF MEASUREMENT

- NOMINAL: categories only.

- ORDINAL: numerals represent a rank order; distances between subsequent numerals may not be equal.

- INTERVAL: subsequent numerals represent equal distances; differences are meaningful, but there is no natural zero point.

- RATIO: numerals represent equal distances; differences are meaningful, and there is a natural zero point.
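Each scale adds a property to the one before it, which is why some summaries only make sense from a certain scale upwards. The sketch below encodes a common convention: the mode for nominal data, the median from ordinal upwards, the mean from interval upwards, and ratios such as "twice as much" only for ratio data. This mapping is an assumption in the spirit of Stevens' classification rather than something stated in the notes, and `meaningful_summaries` is a hypothetical helper.

```python
from enum import IntEnum
from typing import List


class Scale(IntEnum):
    """Scales of measurement, ordered from least to most informative."""
    NOMINAL = 1
    ORDINAL = 2
    INTERVAL = 3
    RATIO = 4


# Lowest scale at which each summary is usually considered meaningful
# (an assumed convention; stricter and looser rules both exist).
MINIMUM_SCALE = {
    "mode": Scale.NOMINAL,           # only needs category membership
    "median": Scale.ORDINAL,         # needs a rank order
    "mean": Scale.INTERVAL,          # needs equal distances between numerals
    "ratio of values": Scale.RATIO,  # "twice as much" needs a natural zero
}


def meaningful_summaries(scale: Scale) -> List[str]:
    """Summaries that are meaningful for data measured on the given scale."""
    return [name for name, minimum in MINIMUM_SCALE.items() if scale >= minimum]


for scale in Scale:
    print(scale.name, meaningful_summaries(scale))
```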

RELIABILITY

Measurement errors: discrepancies between the actual value of the variable being measured and the value obtained through a measurement tool or procedure. They may affect the reproducibility of outcomes across studies. There are two types:

- Systematic measurement errors, known as bias, function as extraneous variables. They are consistent and predictable inaccuracies that occur in the same direction every time a measurement is taken.

- Random errors do not contribute to systematic differences between groups. They are unpredictable and occur without any consistent pattern.

[Figure 3: Systematic error vs. random error. Random errors vary in magnitude and direction; systematic errors, by contrast, tend to be consistent.]

Reliability: how consistent the results of a measure are. In other words, if you repeat a study or a measurement, you should get the same results.

- Test-retest reliability: the stability of a measure over time (consistent scores every time we test). It is used to assess whether a test produces similar results when administered to the same group of people at two different points in time, as long as nothing significant has happened to them between testings.

- Interrater reliability: the consistency between different individuals (raters) who are evaluating or observing the same behavior or phenomenon (consistent scores no matter who is rating).

- Internal reliability: the consistency within the measurement itself (consistent scores no matter how you ask). It ensures that all parts of a test or survey measure the same concept and produce similar results (not to be confused with internal validity!). It is the extent to which multiple measures, or items, are all answered the same way by the same set of people, and it is relevant for measures that use more than one item to get at the same construct.

  - Cronbach's alpha: a statistic computed from the average of all the inter-item correlations and the number of items in the scale.

[Figure: Interrater reliability scatterplots plotting Observer Mark's ratings against Observer Matt's and Observer Peter's ratings of the same targets (e.g., Jackie, Jay).]

Reliability: do you get consistent scores every time?

- Test-retest reliability: people get consistent scores every time they take the test.

- Internal reliability: people give consistent scores on every item of a questionnaire.

- Interrater reliability: two coders' ratings of a set of targets are consistent with each other.

Researchers start by stating a definition of their construct (the conceptual variable) and then move to its operational definition.
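The three kinds of reliability above are usually quantified with correlation-based statistics. As a minimal sketch using only the Python standard library and hypothetical data, test-retest and interrater reliability can be expressed as a Pearson correlation between two sets of scores, and internal reliability as Cronbach's alpha. `cronbach_alpha` uses the usual variance-based ("raw") formula. `standardized_alpha` uses the average inter-item correlation and the number of items, which matches the description above. All function names are illustrative.

```python
from itertools import combinations
from statistics import mean, variance
from typing import List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation, e.g. test vs. retest scores or rater A vs. rater B."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when scores do not vary")
    return sxy / (sxx * syy) ** 0.5


def cronbach_alpha(item_scores: List[List[float]]) -> float:
    """Raw alpha: k/(k-1) * (1 - sum of item variances / variance of total scores).

    item_scores has one row per person and one column per item.
    """
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*item_scores))             # one tuple of scores per item
    totals = [sum(row) for row in item_scores]  # each person's total score
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return k / (k - 1) * (1 - sum(variance(col) for col in items) / total_var)


def standardized_alpha(item_scores: List[List[float]]) -> float:
    """Alpha from the average inter-item correlation r_bar and number of items k."""
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*item_scores))
    rs = [pearson_r(a, b) for a, b in combinations(items, 2)]
    r_bar = sum(rs) / len(rs)
    return k * r_bar / (1 + (k - 1) * r_bar)


# Hypothetical data: four people answering a three-item scale (1 to 5).
answers = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [1, 2, 1],
]
print("raw alpha:", round(cronbach_alpha(answers), 2))
print("standardized alpha:", round(standardized_alpha(answers), 2))

# Hypothetical test-retest data: the same five people tested twice.
time_1 = [10, 12, 15, 18, 20]
time_2 = [11, 12, 14, 19, 21]
print("test-retest r:", round(pearson_r(time_1, time_2), 2))
```

The two alpha versions agree exactly only when all items have equal variances. Raw alpha is the one most statistics packages report by default.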